Um Ihnen die Funktionen unseres Online-Shops uneingeschränkt anbieten zu können setzen wir Cookies ein. Weitere Informationen

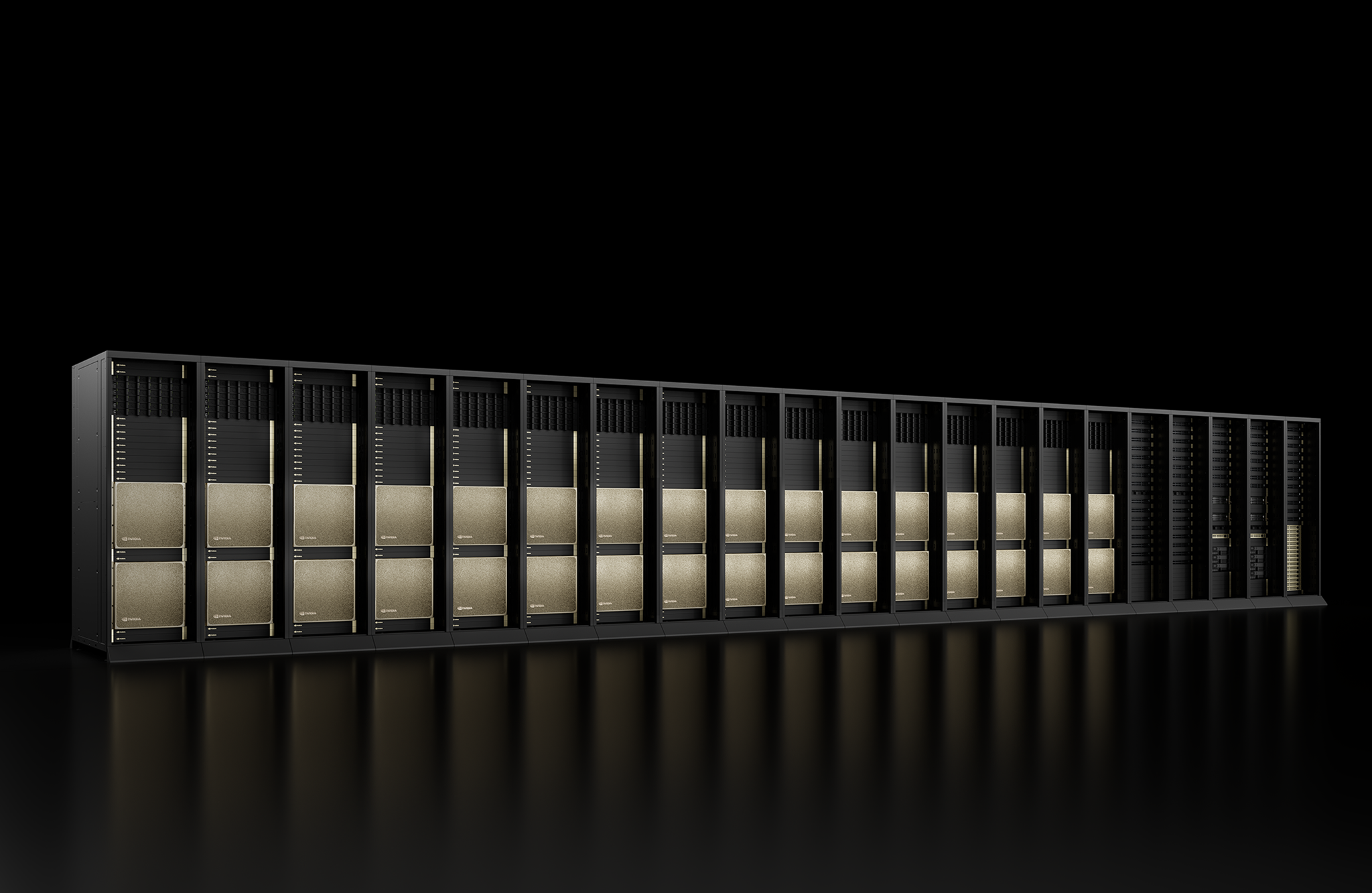

NVIDIA DGX SuperPOD™

Ihre produktionsreife AI Factory

Warum DGX SuperPOD™?

Künstliche Intelligenz krempelt ganze Branchen um – von der Forschung über

die Industrie bis hin zum Kundenservice. Doch während die Visionen groß sind,

sieht die Realität oft anders aus: Cloud-Kosten schießen durch die Decke, IT-

Landschaften sind zersplittert, und viele KI-Projekte verheddern sich in

Infrastrukturproblemen, bevor sie überhaupt Wirkung entfalten können.

Der NVIDIA DGX SuperPOD™ ist die Antwort: eine sofort nutzbare Plattform,

die mit Ihren Anforderungen wächst, Kosten planbar macht und auf

modernster GPU-Technologie basiert. Die Vorteile im Überblick:

Unsere Rolle als DGX Partner

"Wir begleiten von Standort bis Betrieb"

Technologie die Maßstäbe setzt

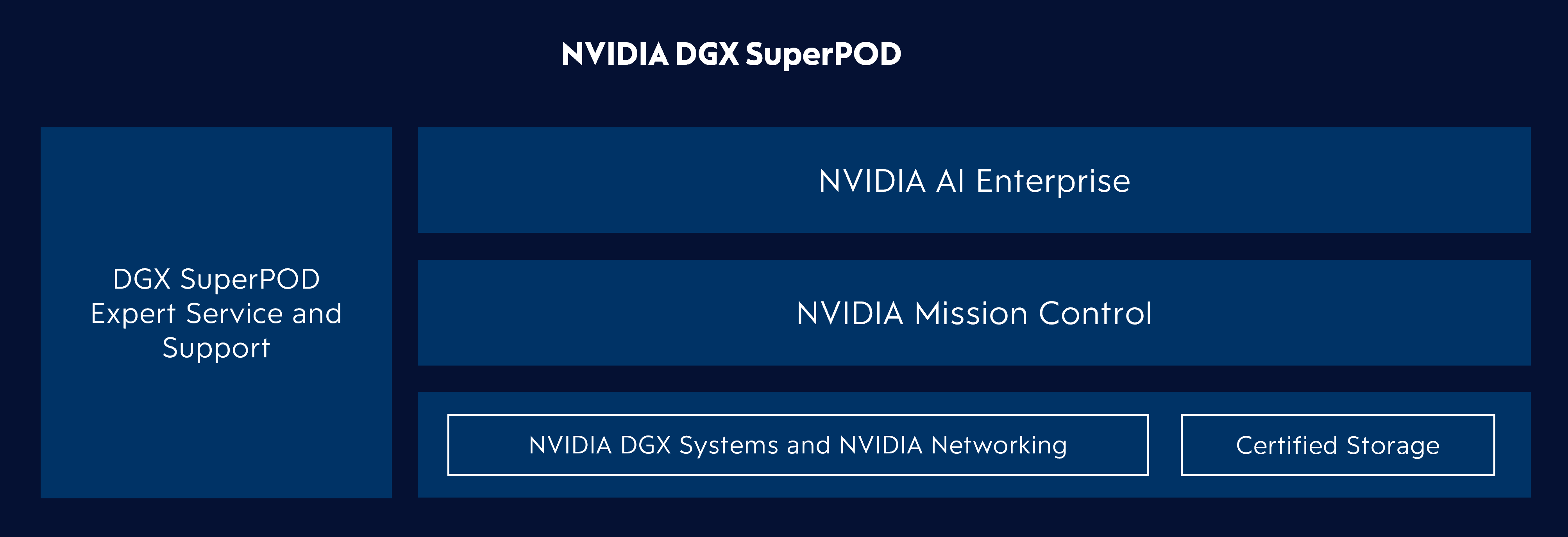

DGX SuperPOD™ Software

Der DGX SuperPOD™ ist eine integrierte Hardware- und Softwarelösung. Die enthaltene Software ist von Grund auf für KI

optimiert. Von den beschleunigten Frameworks und der Workflow-Verwaltung bis hin zur Systemverwaltung und den Low-

Level-Optimierungen des Betriebssystems ist jeder Teil des Stacks darauf ausgelegt, die Leistung und den Wert des DGX

SuperPOD™ zu maximieren.

NVIDIA AI Enterprise

NVIDIA AI Enterprise ist der Enterprise-Software-Stack für produktive KI, der nicht nur optimierte Frameworks und Container bereitstellt, sondern vor allem umfassenden Support durch NVIDIA. Statt als Nutzer allein mit Open-Source-Tools zu arbeiten, profitieren Unternehmen von zertifizierten Workflows, regelmäßigen Updates, Security-Patches und direktem Zugang zu NVIDIA-Experten. Die Suite umfasst bewährte Frameworks wie RAPIDS, TAO Toolkit, TensorRT™ und den Triton Inference Server, die für den Einsatz auf dem DGX SuperPOD™ validiert und unterstützt sind.

NVIDIA Mission Control™

NVIDIA Mission Control™ ist eine Betriebsplattform für KI-Rechenzentren, die Infrastruktur, Workloads und Facility-Systeme zentral überwacht und automatisiert steuert. Sie sorgt für höhere Effizienz, Ausfallsicherheit und eine schnellere Bereitstellung von KI-Workloads – ohne dass tiefes Spezialwissen im Betrieb nötig ist. Durch Funktionen wie automatische Job-Wiederherstellung, energieoptimierte Leistungsprofile und anpassbare Dashboards beschleunigt Mission Control den Weg von Experimenten zur produktiven KI.

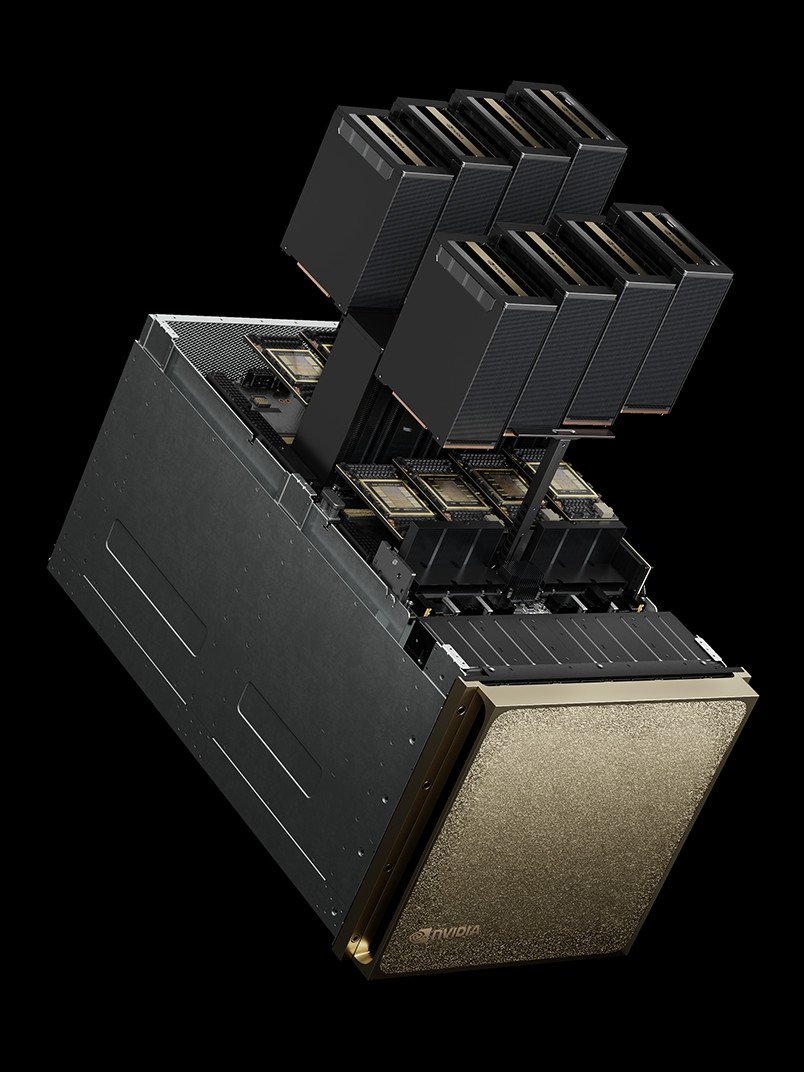

Die wichtigsten Komponenten des DGX SuperPOD™

Der NVIDIA DGX SuperPOD™ ist die Referenzarchitektur für produktionsreife KI.

Mit Scalable Units (SU) oder dem GB200 NVL72 Rackscale-System skalieren Sie Ihre AI Factory flexibel –

von 32 Nodes bis zu Exaflop-Klassen. Garantierte Leistung, planbare Erweiterung, schnelle Bereitstellung.

Viele DGX SuperPOD™ Lösungen werden von großen und bekannten Kunden, im Bereich Data Center und

Cloud-Service, auf der ganzen Welt genutzt.

Zwei Wege zur Skalierung Ihrer AI Factory

NVIDIA DGX™ B200

NVIDIA GB200 NVL72

FAQ

Er eignet sich ideal für das Training und die Inferenz großer KI-Modelle (z. B. Large Language Models), Generative AI, Big Data Analytics, Simulationen, autonome Systeme, medizinische Bildverarbeitung und viele weitere KI-getriebene Forschungsvorhaben und Unternehmensanwendungen.

Die Architektur basiert auf sogenannten Scalable Units (SUs), jeweils bestehend aus 32 DGX B200 Systemen, die über ein ultraschnelles InfiniBand-Backbone vernetzt sind. Je nach Bedarf können mehrere SUs zu einem Großcluster verbunden und zentral verwaltet werden, was eine flexible, zukunftssichere Skalierung ermöglicht.

Zu den Vorteilen zählen höchste Leistung (bis zu 3x schneller als Vorgänger, 12x energieeffizienter), niedrige Latenz, garantierte Quality-of-Service (QoS), volle Kontrolle über sensible Daten, flexible Ressourcenzuteilung durch Multi-Instance GPU (MIG) sowie planbare, langfristig günstige Betriebskosten (TCO). Anders als reine Cloud-Lösungen ermöglicht der SuperPOD™ maximale Auslastung und individuelle Anpassung an die eigenen Anforderungen.

Nach Bereitstellung der benötigten Hardware-Komponenten kann die komplette Implementierung und Inbetriebnahme erfahrungsgemäß innerhalb von 4 bis 6 Wochen erfolgen – inklusive Integration in bestehende Infrastrukturen und produktiv nutzbarem Betrieb.

Mit DELTA als zertifiziertem NVIDIA Partner profitieren Unternehmen von persönlicher und unabhängiger Beratung, vollumfänglicher Projektbetreuung (Architektur, Colocation, Netzwerk, Support) sowie Zusatz-Services wie Schulungen und schnellen Servicezeiten – alles maßgeschneidert für die jeweiligen Geschäftsanforderungen.

Es stehen verschiedene Support-Level zur Verfügung: Von Enterprise Business Standard über Business Critical bis zu individuellen Supportpaketen. Immer inklusive: dedizierter Ansprechpartner, 24/7-Erreichbarkeit, Premium Technical Account Manager (PTAM) sowie Zugriff auf NVIDIA KI-Experten (DGXperts) für alle strategischen und operativen Fragestellungen.

DELTA plant, gestaltet und realisiert maßgeschneiderte Colocation-Lösungen in Top-Rechenzentren. Das umfasst Standortberatung, Network- und Compliance-Support, Integration, Migration sowie dauerhaftes Betriebsmanagement. Bei Bedarf begleiten wir auch Hybrid-Deployments aus OnPrem, Colocation und Cloud.

Ja, gemeinsam mit NVIDIA Financial Services bietet DELTA individuelle Finanzierungs- und Leasingmodelle, die einen schnellen, effizienten Einstieg in KI-Großprojekte ermöglichen, ohne die eigenen Budgets zu stören. So wird planbare Skalierung auch operativ möglich.

Nutzen Sie einfach unser Kontaktformular, fordern Sie eine persönliche Beratung oder eine Produktschau (Demo) an – unser Team meldet sich umgehend und beantwortet gerne individuelle Fragen!